Release blog 2025.3 - The Autumn Release

The Axual 2025.3 release introduces KSML 1.1 integration for automated stream processing deployment, group-based resource filtering for multi-team governance, and an experimental MCP Server for AI-driven platform operations. It also includes JSON schema support, Protobuf processing (beta), and enhanced audit tracking for enterprise Kafka implementations.


Axual 2025.3 addresses the governance complexities that emerge as Kafka implementations scale across enterprise teams.

As streaming platforms grow beyond single-team deployments, maintaining visibility and control becomes increasingly challenging. This release focuses on streamlining stream processing deployment, enhancing resource visibility, and exploring AI-driven platform interaction.

The integrated KSML 1.1 workflow enables teams to deploy stream processing applications with automatic authentication configuration and multi-format schema support, reducing deployment overhead while maintaining centralized governance.

Enhanced self-service capabilities provide group-based resource filtering and expanded audit tracking, ensuring teams can manage their resources independently while maintaining visibility across the platform.

Additionally, our experimental MCP Server allows natural language interaction with your streaming platform, enabling teams to query resources and deploy applications through AI agents while preserving authentication and governance controls.

These capabilities build on Axual's foundation of enterprise streaming governance, providing the control and visibility required for mission-critical data infrastructure.

KSML 1.1 with improved documentation

KSML is a declarative wrapper language and interpreter around Kafka Streams that lets teams define streaming topologies in YAML instead of compiled Java code. You describe a topology as a processing pipeline: a series of steps that your data passes through. Custom processing functions are written in Python and embedded inline in the topology definition. KSML reads the definition and constructs the topology dynamically via the Kafka Streams DSL, so teams can iterate without a compile-package-deploy cycle.

With KSML, you can:

- Define streaming data pipelines using declarative YAML syntax

- Implement custom processing logic using embedded Python functions

- Deploy applications without compiling Java code

- Rapidly prototype and iterate on streaming applications
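To give a feel for the kind of logic that gets embedded, here is a minimal sketch of a Python predicate that a filter step might call. It is plain Python only: the function name, the `temperature` field, and the threshold are illustrative assumptions, and the YAML that KSML uses to reference such a function is not shown.

```python
# Illustrative only: a standalone predicate of the kind that could be embedded
# in a topology definition. The field name and threshold are assumptions.
def is_high_temperature(key, value, threshold=30.0):
    """Return True when the record carries a temperature above the threshold."""
    if not isinstance(value, dict):
        return False  # skip empty or non-structured payloads
    temperature = value.get("temperature")
    if not isinstance(temperature, (int, float)):
        return False  # missing or non-numeric reading
    return temperature > threshold


# Try the predicate against a few in-memory records instead of a Kafka topic.
readings = [
    ("sensor-1", {"temperature": 21.5}),
    ("sensor-2", {"temperature": 34.0}),
    ("sensor-3", None),
]
hot = [key for key, value in readings if is_high_temperature(key, value)]
print(hot)
```

Keeping the predicate free of Kafka dependencies means it can be unit tested on its own before it is placed in a topology definition.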

Version 1.1.0 brings new functionality for:

- JSON schema support - Validate structured data against schemas during processing

- Protobuf support (BETA) - Enables Protobuf-based message processing

- State store management

- Improved type system - Enhanced support for lists, tuples, and maps in processing logic

- Batch message production - Producers can now emit multiple messages in a single operation

- Multiple bug fixes and stability improvements

Breaking change: Aggregate operations now require explicit state store configuration. See the migration guide in the complete release notes.

You can read the complete KSML 1.1 release notes here or dive into the documentation.

Why teams choose KSML

KSML expands access to stream processing across development teams. The YAML-based definitions and Python functions eliminate Java compilation requirements, enabling faster iteration on streaming topologies.

Its main benefits are:

- Simplified Development - KSML dramatically reduces the complexity of building Kafka Streams applications. Instead of writing hundreds of lines of Java code, you can define your streaming logic in a concise YAML file with embedded Python functions.

- No Java Required - While Kafka Streams is a powerful Java library, KSML eliminates the need to write Java code. This opens up Kafka Streams to data engineers, analysts, and other professionals who may not have Java expertise.

- Rapid Prototyping - KSML allows you to quickly prototype and test streaming applications. Changes can be made to your KSML definition and deployed immediately, without a compile-package-deploy cycle.

- Full Access to Kafka Streams Capabilities - KSML provides access to the full power of Kafka Streams, including stateless operations (map, filter, etc.), stateful operations (aggregate, count, etc.), windowing operations, stream and table joins, and more.

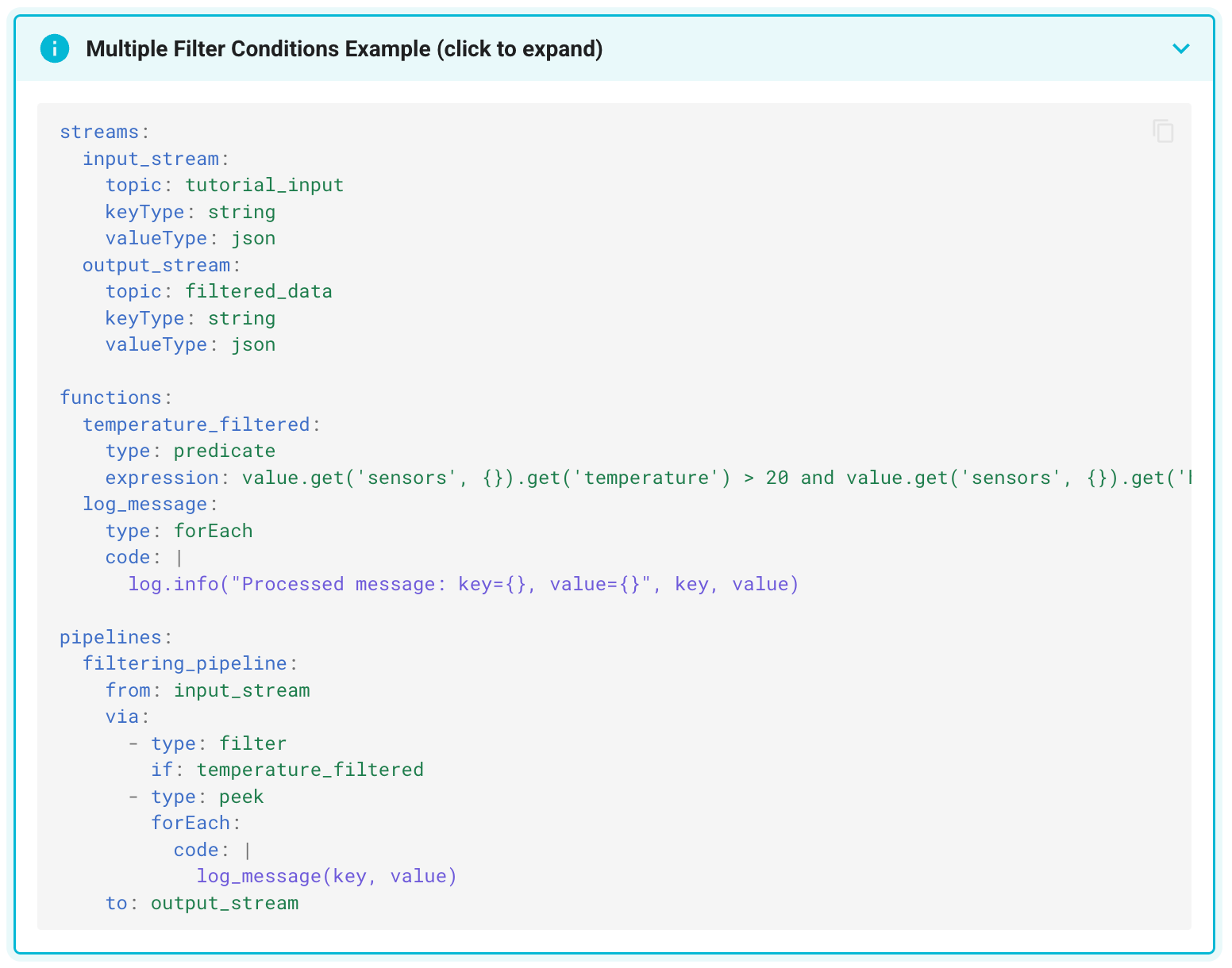

An example of a KSML Temperature Monitoring app is shown in the following picture:

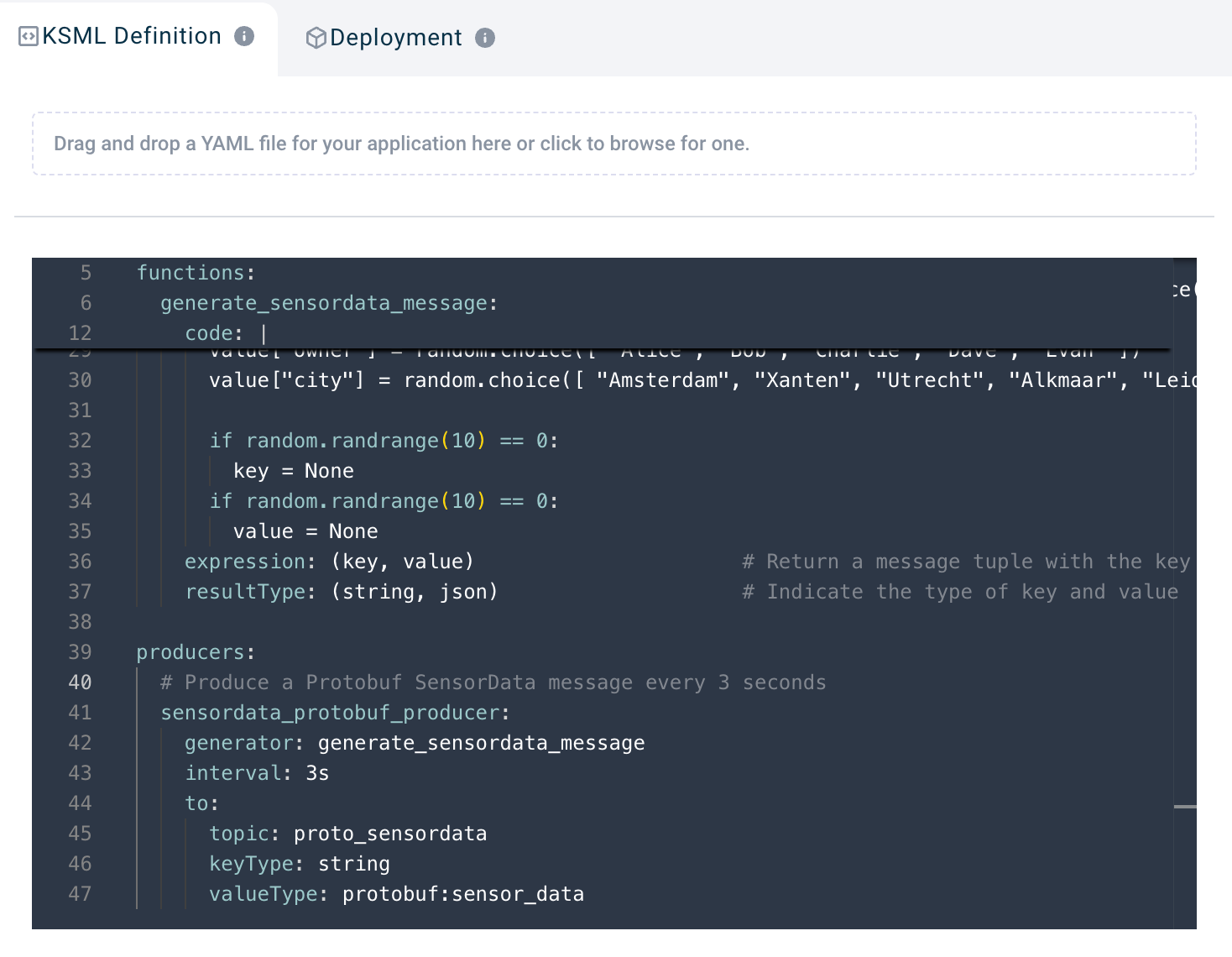

Self-Service improvements: KSML 1.1.0 integration

The KSML 1.1.0 integration with Self-Service automates authentication and connection configuration for KSML applications. Application owners can select their preferred authentication method (SASL credentials or mTLS certificates), and the platform automatically provisions the appropriate Kafka bootstrap servers and security configuration. The integration supports all Schema Registries available in KSML 1.1.0, enabling KSML applications to process messages in multiple schema formats.
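As a rough illustration of what this removes from the application owner's plate, the sketch below assembles the kind of Kafka client properties that each authentication method typically requires. It is not Axual's implementation: the function name, hostnames, file paths, and the choice of SCRAM-SHA-512 as the SASL mechanism are assumptions; only the property keys are standard Kafka client settings.

```python
def build_client_config(bootstrap_servers, auth_method, **credentials):
    """Return Kafka client properties for the chosen authentication method.

    Hypothetical helper: it mimics, by hand, the configuration that the
    platform is described as provisioning automatically.
    """
    config = {"bootstrap.servers": bootstrap_servers}
    if auth_method == "sasl":
        # Assumption: SCRAM-SHA-512 over TLS; other SASL mechanisms exist.
        config.update({
            "security.protocol": "SASL_SSL",
            "sasl.mechanism": "SCRAM-SHA-512",
            "sasl.jaas.config": (
                "org.apache.kafka.common.security.scram.ScramLoginModule required "
                f'username="{credentials["username"]}" '
                f'password="{credentials["password"]}";'
            ),
        })
    elif auth_method == "mtls":
        # Mutual TLS: the client presents a certificate from its keystore.
        config.update({
            "security.protocol": "SSL",
            "ssl.keystore.location": credentials["keystore_path"],
            "ssl.keystore.password": credentials["keystore_password"],
            "ssl.truststore.location": credentials["truststore_path"],
        })
    else:
        raise ValueError(f"Unsupported authentication method: {auth_method!r}")
    return config


# Placeholder values throughout; none of these come from a real environment.
sasl_config = build_client_config(
    "broker.example.com:9093", "sasl",
    username="ksml-app", password="change-me",
)
mtls_config = build_client_config(
    "broker.example.com:9093", "mtls",
    keystore_path="/etc/certs/app.keystore.p12",
    keystore_password="change-me",
    truststore_path="/etc/certs/truststore.p12",
)
print(sasl_config["security.protocol"], mtls_config["security.protocol"])
```

With the integration, none of these values need to be written by hand; the sketch only shows the configuration surface that is being automated.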

Who benefits from the KSML integration?

This integration benefits teams running KSML applications through Axual’s managed platform. Application owners gain automated security provisioning and multi-format schema support without manual bootstrap server configuration or credential management. Platform administrators maintain centralized governance over authentication methods and schema registry access while enabling self-service deployment of stream processing applications.

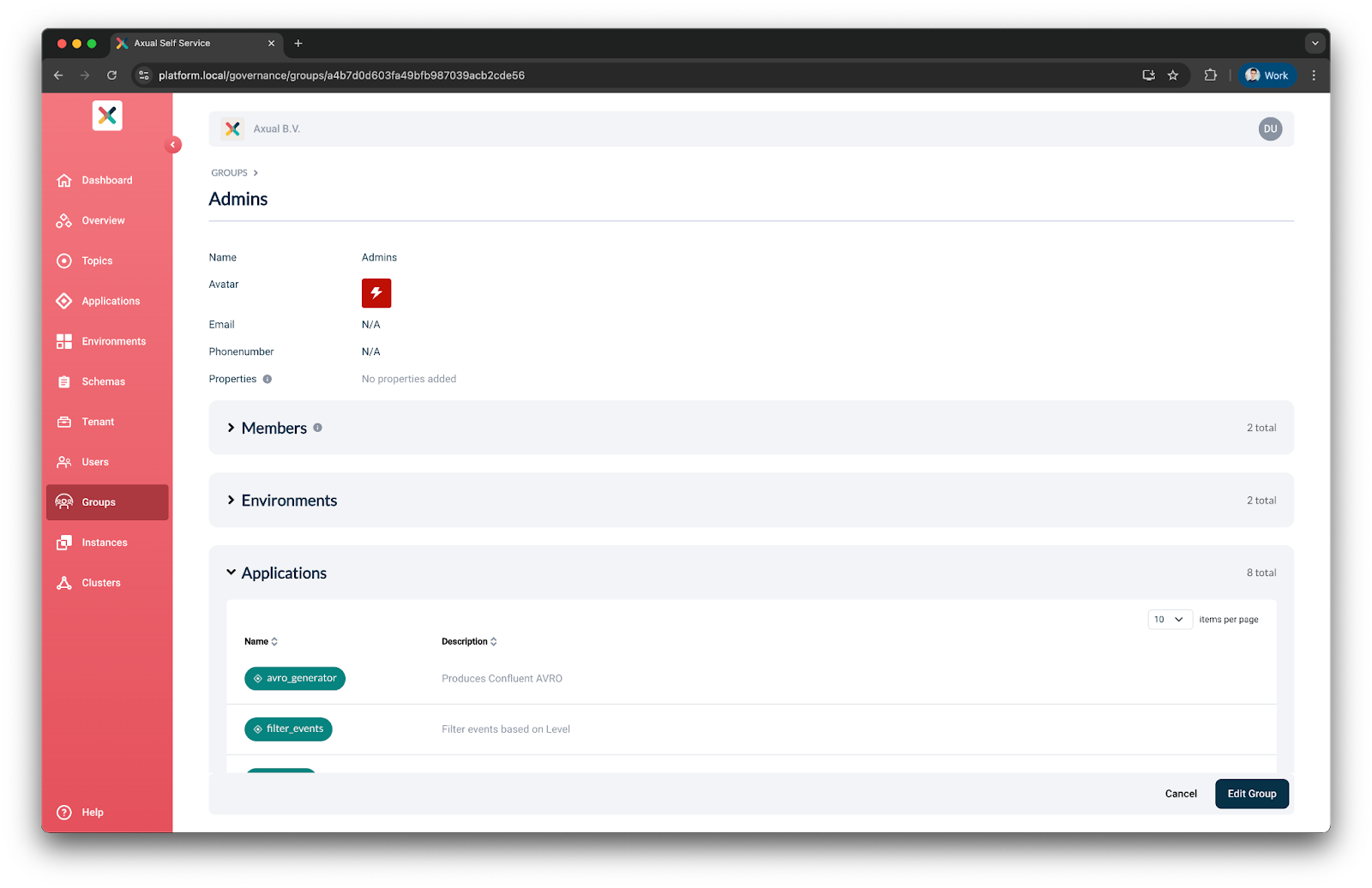

Self-Service improvements: Group-owned resources

As streaming platforms grow, tracking resource ownership across teams becomes complex. Groups accumulate topics, applications, and connectors over time, making cleanup operations and compliance audits difficult. The group-owned resources view provides a filtered inventory of all resources owned by a specific group. This enables teams to quickly assess their resource footprint, identify unused resources, and maintain an accurate view of their streaming infrastructure ownership.

Benefits for Team Resource Ownership and Platform Governance

Group members use this view to manage their team's streaming resources and perform regular cleanup of deprecated topics and applications. Platform administrators gain visibility into resource distribution across teams for capacity planning and governance reviews. The consolidated view also supports compliance requirements by documenting which organizational units own specific data streams and processing applications, maintaining clear ownership records for audit purposes.
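Conceptually, the view is a filter over the platform's resource inventory keyed on the owning group. The sketch below shows that idea on in-memory data; the `Resource` type, its fields, and the sample names are hypothetical and do not reflect Axual's data model or API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """Hypothetical inventory entry; field names are assumptions."""
    name: str
    kind: str         # "topic", "application", or "connector"
    owner_group: str


def resources_owned_by(resources, group):
    """Return the names of resources owned by `group`, bucketed by kind."""
    owned = defaultdict(list)
    for resource in resources:
        if resource.owner_group == group:
            owned[resource.kind].append(resource.name)
    return dict(owned)


inventory = [
    Resource("payments-events", "topic", "payments"),
    Resource("fraud-scoring", "application", "payments"),
    Resource("orders-events", "topic", "orders"),
    Resource("orders-sink", "connector", "orders"),
]
print(resources_owned_by(inventory, "payments"))
```

Bucketing by resource kind mirrors how a team would typically review its footprint: topics first, then the applications and connectors that depend on them.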

Experimental: Axual MCP Server

AI-Driven platform management with MCP Server

The Axual MCP Server implements the Model Context Protocol, enabling AI agents like Claude, Cursor, and other MCP-compliant clients to interact with Axual's management API through natural language. This experimental integration allows teams to perform platform operations through conversational interfaces while maintaining Axual's authentication and authorization controls. The MCP Server translates natural language requests into authenticated API calls, enabling tasks such as topic creation, application registration, KSML deployment, and resource queries without directly invoking the API. All operations respect existing role-based access controls and audit logging, ensuring governance policies are enforced regardless of the interface used. The server is available on request for both Axual Cloud and self-managed Axual Platform deployments.
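For readers unfamiliar with the protocol, MCP clients invoke server capabilities as JSON-RPC 2.0 requests, using the `tools/call` method with a tool name and arguments. The sketch below builds such a request. The tool name `create_topic` and its arguments are hypothetical, since this post does not list the tools the Axual MCP Server exposes, and transport and authentication are left out.

```python
import json
from itertools import count

# Each JSON-RPC request needs an id so the response can be matched to it.
_request_ids = count(1)


def build_tool_call(tool_name, arguments):
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool and arguments, standing in for what an AI agent might
# produce from a prompt such as "create a topic for payment events".
request = build_tool_call(
    "create_topic",
    {"name": "payments-events", "partitions": 6},
)
print(json.dumps(request, indent=2))
```

Because the agent only produces structured requests like this one, the server can apply the same authentication, authorization, and audit logging as for any other API client.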

Detailed demo videos will be available in the near future.

Use Cases for Platform Operations and Automation

The Axual MCP Server is built for anyone who needs to operate, automate, or prototype workflows against Axual’s streaming platform without working directly with low-level APIs:

- Data engineers who want to create and manage Kafka topics, stream processing jobs, and connectors quickly using natural language or agent-driven workflows.

- Product managers and analysts who want to prototype production operations or ask the system questions like “show me unused topics” without deep CLI knowledge.

General Improvements

Our release notes list the other, smaller updates in this release. We continue to improve the product based on your feedback.

Begin your Kafka journey with Axual

Inspired by what you've read? Facing challenges with Kafka in an enterprise environment? We're here to assist. Here are your next steps:

Request a demo and talk to our experts

Axual 2025.3 provides the governance controls and operational visibility required for enterprise streaming platforms that span multiple teams and compliance requirements. The enhanced self-service capabilities, integrated KSML workflows, and expanded audit tracking ensure your Kafka infrastructure maintains control without sacrificing development agility.

Ready to strengthen governance across your streaming infrastructure? Contact us today to explore how Axual 2025.3 addresses the compliance and operational challenges facing enterprise Kafka implementations.

