
This article by Axual founder, Jeroen van Disseldorp, discusses the use of Apache Kafka’s Streams API for sending alerts to Rabobank customers.

RABOBANK AIMS TO LEAD

With more than 900 branches, 48,000 employees and €681bn in assets, Netherlands-based Rabobank provides financial products and services to millions of customers worldwide. A cooperative bank, a socially responsible bank, it aims to be the market leader across all Dutch financial markets. Rabobank is also committed to being a leader in the world’s agriculture sector.

For the past few years, and partly with data protection compliance in mind, Rabobank has actively invested in becoming a real-time, event-driven bank. Anyone familiar with banking processes will understand that this is a giant step. Many banking processes are implemented as batch jobs on not-so-commodity hardware, so the migration task is immense. But Rabobank has taken up this challenge and defined the Business Event Bus (BEB) as the place where business events from across the organisation are shared between applications. They chose Apache Kafka as the main engine and wrote their own BEB client library to help application developers with features like easy message producing/consuming and disaster recovery.

Rabobank uses an Active-Active Kafka setup, which mirrors Kafka clusters in multiple data centres symmetrically. In the event of data centre failure or operator intervention, BEB clients—including the Kafka Streams-based applications discussed here—may be switched over from one Kafka cluster to another without requiring a restart. This allows for 24×7 continued operations during disaster scenarios and planned maintenance windows. The BEB client library implements the switching mechanisms for producers, consumers and streams applications.

Rabo Alerts is a system formed by microservices that produce, consume and/or stream messages from BEB. All data types and code discussed below are in a GitHub repository. This post contains simplified source code listings (e.g., removing unused fields), but the listings still reflect the actual code running in production.

RABO ALERTS

The Rabo Alerts service alerts Rabobank customers whenever certain financial events occur. One example of a simple event is when a certain amount is debited from or credited to your account. But there are also more complex events. Customers can configure alerts based on their preferences and have them delivered via three channels: email, SMS and mobile push notifications. Rabo Alerts has been in production for more than ten years and is available to millions of account holders.

CHALLENGES

Rabo Alerts originally resided on mainframe systems. All processing steps were batch-oriented: the mainframe would derive the alerts to send every couple of minutes or only a few times a day, depending on the alert type. The implementation was stable and reliable, but there were two issues: a lack of flexibility and a lack of speed.

There was little flexibility for adapting to new business requirements because changing the supported alerts or adding new (and smarter) alerts required a lot of effort. Rabobank’s introduction of new features in its online environment has accelerated rapidly in the past few years, meaning that the inflexible alerting solution was becoming increasingly problematic.

Speed of alert delivery was also an issue because the original implementation could take anywhere between five minutes and five hours to deliver alerts to customers (depending on the alert type and batch execution windows). This might have been fast enough ten years ago, but today’s customer expectations are far higher! The time frame in which Rabobank can give the customer ‘relevant information’ is far narrower today than it was then.

The question was how to redesign the existing mechanism to become faster and more extensible. And of course, the redesigned Rabo Alerts would also need to be robust and stable to serve its current user base of millions of customers effectively.

STARTING SMALL

For the past year, we have been redesigning and reimplementing the alerting mechanisms using Kafka and Kafka’s Streams API. Since the entire Rabo Alerts service is quite large, we decided to start with four easy but heavily used alerts:

- Balance Above Threshold

- Balance Below Threshold

- Credited Above Threshold

- Debited Above Threshold

Each of these alerts can arise from the stream of payment details from the Current Account systems. Customers can enable these alerts and configure their threshold per alert. For instance: “send me an SMS when my balance drops below €100”, or “send me a push message when someone credits me more than €1,000” (often used for salary deposit notifications).

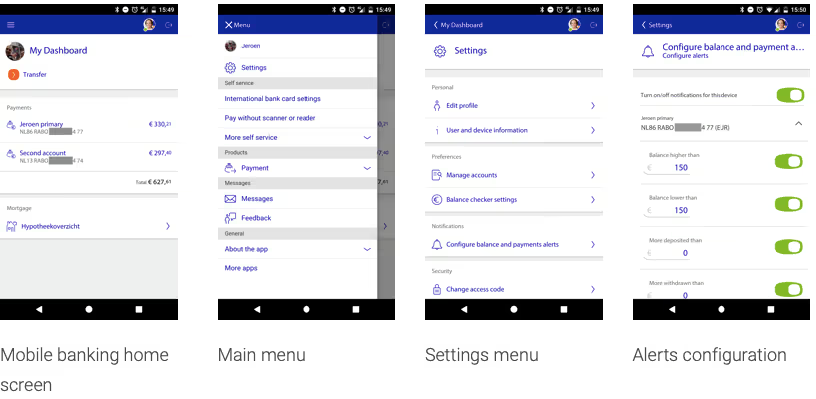

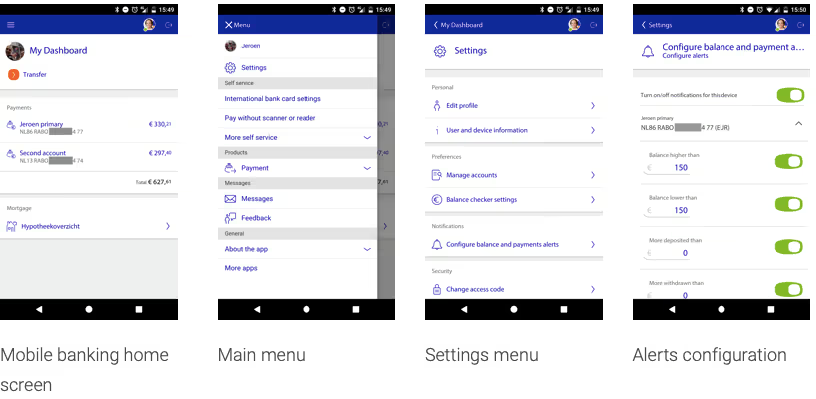

Here are some screenshots that illustrate how to configure Rabo Alerts through the mobile banking app.

ALERTING TOPOLOGY

Our first step was to redesign the alerting process. The basic flow is:

1. Tap into the stream of transactions from the payment factory, producing a stream of account entries. A payment transaction always consists of two account entries, a Debit booking and a Credit booking.

2. For every account entry:

   a. Translate the account number to a list of customers that have read permissions on the account.

   b. For every customer:

      i. See whether the customer has configured Rabo Alerts for the given account number.

      ii. If so, check if this account entry matches the customer’s alert criteria.

      iii. If so, send the customer an alert on the configured channels (email, push and SMS).

Step 1 requires a link with the core banking systems that carry out the transactions.

Step 2a requires that we create a lookup table with all customer permissions of all accounts.

Step 2b needs a lookup table containing the Rabo Alert settings for all customers.
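The flow and its two lookup tables can be sketched in plain Java, with in-memory maps standing in for the lookup tables. This is only an illustration of the steps, not the production code: the class, record and field names, the sample data and the single "balance below" rule are all assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Plain-Java walk-through of the alerting flow; all names and sample data are hypothetical. */
final class AlertFlowSketch {

    /** One booking on an account (half of a payment transaction). */
    record AccountEntry(String accountNumber, long balanceAfterBookingCents) {}

    /** Simplified setting: one "balance below" threshold, delivered on one channel. */
    record AlertSetting(String accountNumber, long balanceBelowCents, String channel) {}

    /** Step 2a lookup table: account number -> customers with read permission. */
    static final Map<String, List<String>> CUSTOMERS_BY_ACCOUNT =
            Map.of("ACC-1", List.of("alice", "bob"));

    /** Step 2b lookup table: customer -> configured alert settings. */
    static final Map<String, List<AlertSetting>> SETTINGS_BY_CUSTOMER =
            Map.of("alice", List.of(new AlertSetting("ACC-1", 10_000, "SMS")));

    /** Runs step 2 for one account entry and describes every alert that should be sent. */
    static List<String> alertsFor(AccountEntry entry) {
        List<String> alerts = new ArrayList<>();
        // Step 2a: which customers may see this account?
        for (String customer : CUSTOMERS_BY_ACCOUNT.getOrDefault(entry.accountNumber(), List.of())) {
            // Step 2b: does this customer have settings, and do they match this entry?
            for (AlertSetting setting : SETTINGS_BY_CUSTOMER.getOrDefault(customer, List.of())) {
                boolean sameAccount = setting.accountNumber().equals(entry.accountNumber());
                boolean belowThreshold = entry.balanceAfterBookingCents() < setting.balanceBelowCents();
                if (sameAccount && belowThreshold) {
                    alerts.add(setting.channel() + " to " + customer + ": balance below threshold");
                }
            }
        }
        return alerts;
    }

    public static void main(String[] args) {
        // A balance of 50.00 is below alice's 100.00 threshold; bob has no settings configured.
        System.out.println(alertsFor(new AccountEntry("ACC-1", 5_000)));
    }
}
```

In the streaming version, each of the two map lookups becomes a join against a table that is kept up to date from a Kafka topic.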

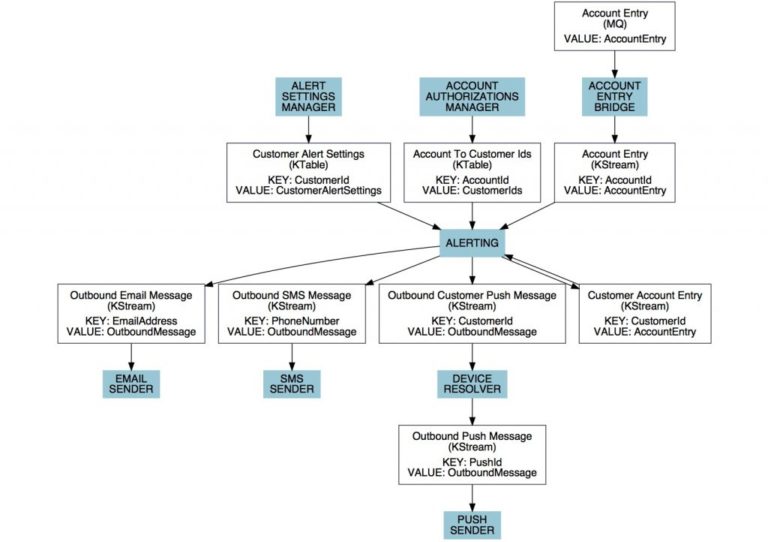

Using this flow and requirements, we drew the following topic flow graph:

All the white boxes are Kafka topics, labelled with their Avro key/value data types. Most data types are self-explanatory, but the following are worth mentioning:

- CustomerAlertSettings: Alert settings for a specific customer. These settings contain:

  - CustomerAlertAddresses: List of channels and addresses to which customer alerts are sent. Mobile push addresses are represented by CustomerId here since the actual list of registered mobile devices is resolved later in the message sending process.

  - CustomerAccountAlertSettings: List of account-specific alert configurations for this customer. The list specifies the alerts the customer wants to receive for this account and at which thresholds.

- ChannelType: Enumeration of available channel types, currently being EMAIL, PUSH and SMS.

- AccountEntry: One booking on one payment account. An account entry is half of a payment transaction. It’s either the Debit booking on one account or the Credit booking on the other.

- OutboundMessage: The contents of the message sent to a customer. It contains a message type and parameters, but not its addressing. That information is in the key of the Outbound topics.

The blue boxes are standalone applications (also known as ‘microservices’), implemented as runnable jars using Spring Boot and deployed on a managed platform. Together, they provide all the functionality needed to implement Rabo Alerts:

- Alert Settings Manager: fills the compacted topic that contains all alert settings per customer.

- Account Authorisation Manager: an account is not tied 1:1 to a customer but may be viewed by different users. For example, accounts shared between spouses, or business accounts with different authorisations for employees, have arbitrary account/user authorisation relationships. This application fills a compacted topic that links an account number to authorised customer IDs. It also runs in real time, so changes in authorisations take effect in the alerts immediately.

- Account Entry Bridge: retrieves the stream of all payments from Rabobank’s mainframe-based payment factory via IBM MQ and forwards them to a Kafka topic.

- Alerting: core alerting service (see below).

- Device Resolver: an auxiliary application that looks up all the customer’s mobile devices from an external system and writes the same message to another topic once per device (PushId). This lookup could also be done via another compacted topic, but is implemented using a remote service call for various reasons.

- Senders: each Sender consumes messages from a channel-bound topic and sends them to the addressed customers. Each channel is assigned its own Kafka topic to decouple channel failures from one another. This allows alerts to still be sent out via push when the email server is down, for example.
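The two manager applications fill topics that are used as lookup tables. With log compaction, Kafka retains at least the latest record per key, and a record with a null value (a tombstone) marks the key as deleted, so replaying such a topic yields the current table. The sketch below illustrates that "last value per key wins" behaviour in plain Java; the `Update` record and the sample data are hypothetical and only stand in for the account authorisation records.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Illustrates how replaying a keyed changelog yields a lookup table. Names are hypothetical. */
final class CompactedTopicSketch {

    /** One record on the topic; a null customerIds value is a tombstone that deletes the key. */
    record Update(String accountNumber, List<String> customerIds) {}

    /** Folds the changelog into a table: the last value per key wins, tombstones remove the key. */
    static Map<String, List<String>> materialise(List<Update> changelog) {
        Map<String, List<String>> table = new HashMap<>();
        for (Update update : changelog) {
            if (update.customerIds() == null) {
                table.remove(update.accountNumber());
            } else {
                table.put(update.accountNumber(), update.customerIds());
            }
        }
        return table;
    }

    public static void main(String[] args) {
        List<Update> changelog = List.of(
                new Update("ACC-1", List.of("alice")),
                new Update("ACC-2", List.of("carol")),
                new Update("ACC-1", List.of("alice", "bob")), // bob gains access to ACC-1
                new Update("ACC-2", null));                   // ACC-2 authorisations removed
        System.out.println(materialise(changelog));
    }
}
```

Because every change is just another record on the topic, an authorisation change reaches the alerting application as soon as it has consumed that record.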

SHOW ME THE CODE

The Kafka Streams code for Alerting consists of only two classes.

The first class is the BalanceAlertsTopology. This class defines the main Kafka Streams topology using a given KStreamBuilder. It implements BEB’s TopologyFactory, a custom interface used by BEB’s client library to generate a new Kafka Streams topology after the application starts or when it is directed to switch over to another Kafka cluster (datacenter switch/failover).

```java
KStream<CustomerId, KeyValue<SpecificRecord, OutboundMessage>> addressedMessages =
    builder.<AccountId, AccountEntry>stream(accountEntryStream)
        .leftJoin(accountToCustomerIds, (accountEntry, customerIds) -> {
            if (isNull(customerIds)) {
                return Collections.<KeyValue<CustomerId, AccountEntry>>emptyList();
            } else {
                return customerIds.getCustomerIds().stream()
                    .map(customerId -> KeyValue.pair(customerId, accountEntry))
                    .collect(toList());
            }
        })
        .flatMap((accountId, accountentryByCustomer) -> accountentryByCustomer)
        .through(customerIdToAccountEntryStream)
        .leftJoin(alertSettings, Pair::with)
        .flatMapValues(
            (Pair<AccountEntry, CustomerAlertSettings> accountEntryAndSettings) ->
                BalanceAlertsGenerator.generateAlerts(
                    accountEntryAndSettings.getValue0(),
                    accountEntryAndSettings.getValue1())
        );

// Send all Email messages from addressedMessages
addressedMessages
    .filter((e, kv) -> kv.key instanceof EmailAddress)
    .map((k, v) -> v)
    .to(emailMessageStream);

// Send all Sms messages from addressedMessages
addressedMessages
    .filter((e, kv) -> kv.key instanceof PhoneNumber)
    .map((k, v) -> v)
    .to(smsMessageStream);

// Send all Push messages from addressedMessages
// (CustomerId is later resolved to a list of customer's mobile devices)
addressedMessages
    .filter((e, kv) -> kv.key instanceof CustomerId)
    .map((k, v) -> v)
    .to(customerPushMessageStream);
```

The topology defines several steps:

- Lines 1-13 start with consuming from the account entry stream. When an account entry is retrieved, we look up which customers have access to that specific account. The result is stored in an intermediate topic, using CustomerId as key and AccountEntry as the value. The semantics of the topic are defined as “for this customer (key) this account entry (value) needs processing”.

- Lines 14-20 are executed per customer. We look up the customer’s alert settings and ask a helper class to generate OutboundMessages if this account entry matches the customer’s interests.

- Lines 22-39 take the list of all OutboundMessages and separate them into topics per channel.
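The per-channel split in lines 22-39 relies on the type of the address that each message is keyed by. The same idea can be sketched without Kafka as grouping addressed messages by address type. The record types and topic names below are hypothetical stand-ins, not the Avro types or topic names used in production.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Groups addressed messages per channel topic, based on the address type. Names are hypothetical. */
final class ChannelRoutingSketch {

    record EmailAddress(String value) {}
    record PhoneNumber(String value) {}
    record CustomerId(String value) {}

    /** A message body together with the address it should be delivered to. */
    record Addressed(Object address, String message) {}

    /** Picks the channel-bound topic for an address; the topic names are made up for this sketch. */
    static String topicFor(Object address) {
        if (address instanceof EmailAddress) return "email-messages";
        if (address instanceof PhoneNumber) return "sms-messages";
        if (address instanceof CustomerId) return "customer-push-messages";
        throw new IllegalArgumentException("Unknown address type: " + address);
    }

    /** Splits one list of addressed messages into one list per topic, preserving order. */
    static Map<String, List<Addressed>> route(List<Addressed> messages) {
        Map<String, List<Addressed>> byTopic = new LinkedHashMap<>();
        for (Addressed message : messages) {
            byTopic.computeIfAbsent(topicFor(message.address()), topic -> new ArrayList<>())
                   .add(message);
        }
        return byTopic;
    }

    public static void main(String[] args) {
        System.out.println(route(List.of(
                new Addressed(new EmailAddress("alice@example.com"), "Balance below threshold"),
                new Addressed(new CustomerId("alice"), "Balance below threshold"))).keySet());
    }
}
```

One difference from the topology is worth noting: the three independent filters there would silently drop a message with an unrecognised address type, whereas this sketch throws an exception to make the gap visible.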

The magic of alert generation is implemented in the BalanceAlertsGenerator helper class, called from line 17. Its main method is generateAlerts(), which takes an account entry and the alert settings of a customer who is authorised to view the account. Here’s the code:

```java
public static List<KeyValue<SpecificRecord, OutboundMessage>> generateAlerts(AccountEntry accountEntry,
                                                                             CustomerAlertSettings settings) {
    /* Generates addressed alerts for an AccountEntry, using the alert settings with the following steps:
     * 1) Settings are for a specific account, drop AccountEntries not for this account
     * 2) Match each setting with all alerts to generate appropriate messages
     * 3) Address the generated messages
     */

    if (settings == null) {
        return new ArrayList<>();
    }

    return settings.getAccountAlertSettings().stream()
        .filter(accountAlertSettings -> matchAccount(accountEntry, accountAlertSettings))
        .flatMap(accountAlertSettings -> accountAlertSettings.getSettings().stream())
        .flatMap(accountAlertSetting -> Stream.of(
            generateBalanceAbove(accountEntry, accountAlertSetting),
            generateBalanceBelow(accountEntry, accountAlertSetting),
            generateCreditedAbove(accountEntry, accountAlertSetting),
            generateDebitedAbove(accountEntry, accountAlertSetting))
        )
        .filter(Optional::isPresent).map(Optional::get)
        .flatMap(messageWithChannels -> mapAddresses(messageWithChannels.getValue0(), settings.getAddresses())
            .map(address -> KeyValue.pair(address, messageWithChannels.getValue1())))
        .collect(toList());
}
```

The method’s steps:

- Line 13 streams all account-related alert settings (one object per account).

- Line 14 matches the account number from the settings with the account number in the account entry.

- Line 15 starts streaming the individual settings for the account.

- Lines 16-21 construct the series of messages to be sent, along with a list of channels to send each message to. We use a separate method for every alert type. The result is a stream of Pair<List, OutboundMessage>.

- Line 22 filters out any empty results.

- Lines 23-24 perform the lookup of the customer’s addresses for the given channels and return a stream of KeyValue<address, OutboundMessage>.

- Line 25 finally collects all results and outputs them as a Java List.

Other helper methods in the same class are:

- matchAccount(): matches an account entry with account alerts settings by comparing the account number and currency.

- generateBalanceAbove/Below(): generates BalanceAbove/Below alert messages.

- generateDebited/CreditedAbove(): generates Debited/CreditedAbove alert messages.

- mapAddresses(): looks up the customer’s alert addresses for a given list of channels.

- buildMessage(): builds an OutboundMessage.
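The article does not list the bodies of these helpers, so the following is only a guess at the shape of one of them. It assumes that a "balance below" alert fires whenever the balance after the booking is under the configured threshold, and that a helper returns an empty Optional when its alert type does not apply, which is what lets line 22 filter out non-matches. All types here are simplified, hypothetical stand-ins for the Avro classes. Only the three channel names come from the article.

```java
import java.util.List;
import java.util.Optional;
import java.util.stream.Collectors;

/** Hypothetical shape of a generateBalanceBelow()-style helper; not the production code. */
final class BalanceBelowSketch {

    enum ChannelType { EMAIL, PUSH, SMS }            // channel names as listed in the article
    enum AlertType { BALANCE_BELOW, BALANCE_ABOVE }  // hypothetical alert type tags

    record AccountEntry(String accountNumber, long balanceAfterBookingCents) {}
    record AlertSetting(AlertType type, long thresholdCents, List<ChannelType> channels) {}
    record MessageWithChannels(List<ChannelType> channels, String message) {}

    /** Returns a message only if the setting is a "balance below" alert and the threshold is undercut. */
    static Optional<MessageWithChannels> generateBalanceBelow(AccountEntry entry, AlertSetting setting) {
        boolean applies = setting.type() == AlertType.BALANCE_BELOW
                && entry.balanceAfterBookingCents() < setting.thresholdCents();
        if (!applies) {
            return Optional.empty();
        }
        String text = "Balance on " + entry.accountNumber()
                + " is below " + setting.thresholdCents() + " cents";
        return Optional.of(new MessageWithChannels(setting.channels(), text));
    }

    /** Applies the helper to every setting and keeps only the non-empty results. */
    static List<MessageWithChannels> generate(AccountEntry entry, List<AlertSetting> settings) {
        return settings.stream()
                .map(setting -> generateBalanceBelow(entry, setting))
                .filter(Optional::isPresent).map(Optional::get)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        AccountEntry entry = new AccountEntry("ACC-1", 5_000);
        System.out.println(generate(entry, List.of(
                new AlertSetting(AlertType.BALANCE_BELOW, 10_000, List.of(ChannelType.SMS)),
                new AlertSetting(AlertType.BALANCE_ABOVE, 1_000, List.of(ChannelType.PUSH)))));
    }
}
```

Returning an Optional from each helper keeps the alert types independent of one another: adding a new alert type means adding one more helper to the list, without touching the others.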

Apart from a few additional classes to wrap this functionality in a standalone application, that’s all there is to it!

FIRST TEST RUN

After an initial rudimentary implementation, we took the setup for a test drive. The open question was how fast it would be. Well, it amazed us despite our high expectations! The entire round trip from payment order confirmation to the alert on a mobile device generally takes only one second, and at most two. This round trip includes the time taken by the payment factory (validation of the payment order, transaction processing), so response times may vary depending on the payment factory workload at that moment. The entire alerting chain is typically executed within 120 milliseconds, from the moment an account entry arrives on Kafka until the senders message customers. In the sending phase, push alerts are fastest, taking only 100-200 milliseconds to arrive on the customer’s mobile device. Email and SMS are slower channels, with messages arriving after 2-4 seconds.

Under the old setup, an alert would typically take from several minutes to several hours. The following video demonstrates the speed of alert delivery using my personal test account. Note that although I use it for testing, this is a standard Rabobank payment account in current use!

Rabo Alerts demo


